Understanding Neural Networks

What is a neural network?

Figure 1: What is a Neural Network?

A neural network is a system that loosely mimics the structure of the human brain. Figure 1 presents a multi-layer feedforward Neural Network, which contains an input layer (green), hidden layers (pink) and an output layer (orange). Each layer contains multiple nodes, drawn as small circles: input nodes are green, hidden nodes are pink, and output nodes are orange. Nodes in different layers can be connected to each other, and the strength of the connection between two nodes is called the weight between these nodes. Connections flow from the input layer through the hidden layers to the output layer. Each node in a hidden layer or in the output layer accepts input connections from the previous layer (another hidden layer or the input layer). These weighted inputs are summed and fed to a special function, called an activation function, which produces the node's output. As a result, only the hidden layers and the output layer have activation functions. Different types of activation functions give a Neural Network different characteristics.
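As a minimal sketch of the node computation described above, the following Python assumes a sigmoid activation (one common choice; the article does not name a specific activation function) and, like the description, omits a bias term. The names `sigmoid` and `node_output` and the weight values are hypothetical and chosen only for illustration.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic sigmoid activation: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def node_output(inputs: list[float], weights: list[float]) -> float:
    """Output of one hidden or output node (hypothetical helper).

    Each incoming value is multiplied by the weight of its connection,
    the products are summed, and the sum is passed through the activation.
    """
    if len(inputs) != len(weights):
        raise ValueError("need exactly one weight per input connection")
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))
    return sigmoid(weighted_sum)


# Three incoming connections with made-up weights:
# weighted sum = 1.0*0.4 + 0.5*(-0.2) + (-1.0)*0.1 = 0.2
print(node_output([1.0, 0.5, -1.0], [0.4, -0.2, 0.1]))  # about 0.5498
```

Input nodes would not call `node_output` at all; they simply pass their values on, which is why only hidden and output nodes need an activation.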

In a nutshell, for a given Neural Network structure and set of weights, the output of the Neural Network can be calculated from its inputs. The Neural Network weights are determined by a learning process that learns patterns or features from a data-set. This data-set is a collection of input and target pairs.
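The sketch below shows how a whole feedforward network turns inputs into outputs by applying the node computation layer by layer. It assumes fully connected layers, a sigmoid activation on every non-input layer and no bias terms; the weight layout `weights[k][j][i]`, the 2-3-1 network and the two-pair data-set are hypothetical examples, not taken from the article.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def forward(weights: list[list[list[float]]], inputs: list[float]) -> list[float]:
    """Compute the network outputs from its weights and inputs.

    weights[k][j][i] is the weight of the connection from node i of layer k
    to node j of layer k + 1. The input layer has no activation; every later
    layer applies the sigmoid to its weighted sums.
    """
    activations = inputs
    for layer in weights:
        activations = [
            sigmoid(sum(w * a for w, a in zip(node_weights, activations)))
            for node_weights in layer
        ]
    return activations


# A hypothetical 2-3-1 network: 2 inputs, 3 hidden nodes, 1 output node.
W = [
    [[0.5, -0.4], [0.3, 0.8], [-0.6, 0.1]],  # input -> hidden (3 nodes x 2 weights)
    [[0.7, -0.2, 0.5]],                      # hidden -> output (1 node x 3 weights)
]

# A data-set is a collection of (input, target) pairs.
dataset = [([0.0, 1.0], [1.0]), ([1.0, 1.0], [0.0])]
for x, target in dataset:
    print(x, "->", forward(W, x), "target:", target)
```

With untrained weights like these, the outputs will generally not match the targets; the learning process is what adjusts `W` until they do.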

Neural Network applications

Figure 2: Neural Network application

Because a Neural Network with enough hidden nodes can approximate any continuous mathematical function over a bounded input range to arbitrary accuracy, its applications lie mainly in the fields of function approximation and classification. These fields include function fitting, prediction and pattern recognition, as shown in Figure 2.

Pattern recognition example

Figure 3: Handwriting recognition example

In this example, a data-set for a handwriting recognition application contains 60000 samples of 28x28-pixel grayscale images representing the numbers from 1 to 9. The purpose of this example is to use these image samples to train a Neural Network to recognize the numbers 1 to 9. The Neural Network input is therefore a 28x28-pixel grayscale image, and its target is the corresponding number (from 1 to 9), as shown in Figure 3. Once trained, the Neural Network can recognize the correct number in a handwritten 28x28 grayscale image. In other words, the trained Neural Network recognizes patterns in handwriting images and uses them to identify the numbers correctly.
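One way to present such images and targets to a Neural Network is sketched below. The article does not specify an encoding, so this assumes a common approach: the 28x28 pixels (values 0 to 255) are flattened into 784 input values scaled to [0, 1], and the target is a one-hot vector with one output node per number. All function names are hypothetical.

```python
def flatten_image(image: list[list[int]]) -> list[float]:
    """Turn a 28x28 grayscale image (pixel values 0-255) into 784 inputs in [0, 1]."""
    return [pixel / 255.0 for row in image for pixel in row]


LABELS = list(range(1, 10))  # the numbers 1 to 9, as described in the text


def one_hot(label: int) -> list[float]:
    """Target vector: one output node per number, 1.0 for the correct one."""
    return [1.0 if d == label else 0.0 for d in LABELS]


def predicted_digit(outputs: list[float]) -> int:
    """The recognized number is the one whose output node is most active."""
    best = max(range(len(outputs)), key=lambda i: outputs[i])
    return LABELS[best]


# A blank (all-black) image with a single white pixel, just to show the shapes.
image = [[0] * 28 for _ in range(28)]
image[14][14] = 255
x = flatten_image(image)
print(len(x))       # 784 input nodes
print(one_hot(3))   # [0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]
print(predicted_digit([0.1, 0.05, 0.9, 0.2, 0.0, 0.1, 0.0, 0.3, 0.1]))  # 3
```

Under this encoding, each training pair is `(flatten_image(img), one_hot(label))`, and the trained network's answer is read off with `predicted_digit`.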

Function fitting/prediction example

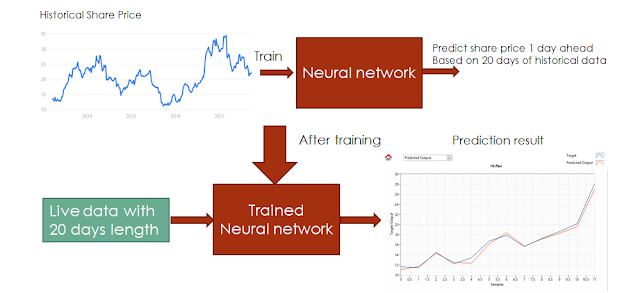

Figure 4: Function fitting/prediction example

In this example, historical data represents a share price over time, and this data might be represented by a continuous mathematical function. If this function were known, future share prices could be predicted. Unfortunately, no such function is known for a stock market. Various techniques, referred to as function fitting, have been explored to construct a mathematical function from historical data. Because it can learn from data, a Neural Network is a natural candidate for this kind of application.

Figure 4 presents a function fitting application of a Neural Network. First, historical stock price data is used to train a Neural Network to learn its features. Once trained, the Neural Network can be used to predict future prices of this stock.
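To train a network on historical prices, the series first has to be turned into input-target pairs. A common approach, assumed here because the article does not describe one, is a sliding window: each input is a fixed number of consecutive past prices and the target is the price that follows. The helper `make_windows` and the price values are hypothetical.

```python
def make_windows(prices: list[float], window: int) -> list[tuple[list[float], float]]:
    """Build (input, target) pairs from a price series (hypothetical helper).

    Each input is `window` consecutive past prices; the target is the price
    that immediately follows them.
    """
    if window < 1:
        raise ValueError("window must be at least 1")
    return [
        (prices[i:i + window], prices[i + window])
        for i in range(len(prices) - window)
    ]


prices = [10.0, 10.5, 10.2, 10.8, 11.1, 10.9]  # made-up closing prices
for inputs, target in make_windows(prices, window=3):
    print(inputs, "->", target)
```

After training on such pairs, the most recent `window` prices would be fed to the network as input, and its output taken as the estimate of the next price.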

How does a Neural Network learn?

Figure 5: How to train a Neural Network?

A Neural Network's ability to recognize patterns or to approximate unknown functions comes from its learning capability. To teach a Neural Network, a data-set that contains a collection of input-target pairs is required. For each input in that data-set, the corresponding target is the output the Neural Network is expected to produce. Figure 5 demonstrates the Neural Network learning process.

For a given Neural Network structure, its outputs can be calculated from its current weights and the inputs from the data-set (outputs = F(W, inputs)). These outputs are then compared against the targets from the data-set to form training signals, which adjust the Neural Network weights via an optimization procedure. This process is repeated until the differences between the Neural Network outputs and the corresponding targets, called errors, are no longer significant. Because the errors are calculated at the output layer and then propagated backwards to adjust the weights layer by layer, from the hidden layers towards the input layer, this process is also called back-propagation. Through this training process, the Neural Network gradually learns the behavior of the data-set.
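The training loop described above can be sketched for a network with one hidden layer. This sketch makes several assumptions that the article does not state: sigmoid activations everywhere, the squared error E = ½ Σ (target − output)² as the error measure, plain gradient descent (w ← w − learning_rate · ∂E/∂w) applied after every input-target pair, a bias weight per node obtained by appending a constant 1.0 input, and a toy data-set (the logical OR of two inputs). The functions `train` and `predict` are hypothetical.

```python
import math
import random


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train(dataset, n_hidden=3, learning_rate=0.5, epochs=2000, seed=0):
    """Train a 1-hidden-layer network with back-propagation and gradient descent.

    Returns the trained weights and the squared error accumulated in each epoch.
    """
    rng = random.Random(seed)
    n_in, n_out = len(dataset[0][0]), len(dataset[0][1])
    # w_h[j][i]: input i (plus bias) -> hidden j; w_o[k][j]: hidden j (plus bias) -> output k
    w_h = [[rng.uniform(-1, 1) for _ in range(n_in + 1)] for _ in range(n_hidden)]
    w_o = [[rng.uniform(-1, 1) for _ in range(n_hidden + 1)] for _ in range(n_out)]
    history = []
    for _ in range(epochs):
        total_error = 0.0
        for x, t in dataset:
            # Forward pass: outputs = F(W, inputs).
            xb = list(x) + [1.0]
            h = [sigmoid(sum(w * v for w, v in zip(row, xb))) for row in w_h]
            hb = h + [1.0]
            y = [sigmoid(sum(w * v for w, v in zip(row, hb))) for row in w_o]
            # Error: difference between outputs and targets.
            total_error += 0.5 * sum((tk - yk) ** 2 for tk, yk in zip(t, y))
            # Backward pass: error signals computed at the output layer ...
            delta_o = [(yk - tk) * yk * (1 - yk) for tk, yk in zip(t, y)]
            # ... and propagated back to the hidden layer (bias column excluded).
            delta_h = [
                h[j] * (1 - h[j]) * sum(delta_o[k] * w_o[k][j] for k in range(n_out))
                for j in range(n_hidden)
            ]
            # Gradient-descent updates: w <- w - learning_rate * dE/dw.
            for k in range(n_out):
                for j in range(n_hidden + 1):
                    w_o[k][j] -= learning_rate * delta_o[k] * hb[j]
            for j in range(n_hidden):
                for i in range(n_in + 1):
                    w_h[j][i] -= learning_rate * delta_h[j] * xb[i]
        history.append(total_error)
    return (w_h, w_o), history


def predict(weights, x):
    """Forward pass only, using trained weights."""
    w_h, w_o = weights
    xb = list(x) + [1.0]
    hb = [sigmoid(sum(w * v for w, v in zip(row, xb))) for row in w_h] + [1.0]
    return [sigmoid(sum(w * v for w, v in zip(row, hb))) for row in w_o]


# Hypothetical toy data-set: the logical OR of two inputs.
OR_DATA = [([0.0, 0.0], [0.0]), ([0.0, 1.0], [1.0]), ([1.0, 0.0], [1.0]), ([1.0, 1.0], [1.0])]
weights, history = train(OR_DATA)
print("error in first epoch:", round(history[0], 4), "last epoch:", round(history[-1], 4))
for x, t in OR_DATA:
    print(x, "->", round(predict(weights, x)[0], 3), "target:", t[0])
```

Updating after every pair (rather than after a full pass over the data-set) is one design choice among several; batch updates and other optimizers follow the same forward, compare, propagate-back, adjust cycle.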
